LLM Evaluation: Approaches, Metrics, and Best Practices

Large Language Model (LLM) evaluation is the process of measuring and assessing how well a language model performs on defined criteria and tasks. In practice, this means testing an LLM’s outputs for quality (accuracy, coherence, etc.), safety, and alignment with intended use. Rigorous evaluation is not just a technical checkbox, it is vital to ensuring these AI models are reliable, accurate, and fit for business purposes. Without proper evaluation, LLMs can generate biased, incorrect, or even harmful content.

LLM evaluation underpins trust and effectiveness in AI solutions. For example, financial firm Morgan Stanley attributes the success of its advisor-facing AI to a “robust evaluation framework” that ensures the model performs consistently at the high standards users expect. In other words, systematic testing gave management the confidence that the AI’s answers would be reliable in practice. With generative AI being rapidly adopted by 65% of organizations worldwide, having a structured evaluation process has become a business imperative. It’s the mechanism that lets organizations harness AI’s benefits while controlling risks. As Gartner emphasizes, companies should evaluate LLMs based on business impact, ensuring the model can scale, stay efficient, and deliver long-term value, rather than just chasing the latest AI hype.

How to Evaluate LLM Models: Approaches and Strategies

1. Laboratory Testing vs. Real-World Assessment

When evaluating LLMs, organizations typically employ a mix of laboratory testing and real-world assessment to get a complete picture. A laboratory evaluation is like a controlled experiment, where an LLM might be evaluated on standalone metrics using prepared datasets or prompts. In this scenario you might prompt the model with 1,000 diverse questions and measure accuracy, or test its output against a known reference. This is useful for controlled comparisons: for example, checking how often the model produces the correct answer on a set of Q&A pairs, or measuring perplexity (a metric of how well the model predicts text) on a validation corpus. Such model evaluation focuses on the LLM in isolation, assessing its core capabilities in a repeatable way. This gives quantitative benchmarks and helps tune the model before users ever see it. However, lab tests alone are not enough.

The next step is bringing the model into a realistic usage scenario: the system evaluation. Real-world assessment exposes the model to the unpredictability of actual usage. System evaluation looks at the LLM’s performance when it’s integrated into an application or workflow, facing real user inputs and interacting with other components. This might involve a pilot deployment, A/B testing the LLM with a subset of users, or running the model in a simulation of the business process.

Both approaches are important: lab tests ensure a baseline of quality under known conditions, and live tests catch issues that only emerge in practice (like odd edge-case behaviors or performance bottlenecks). In Morgan Stanley’s case, the team tested GPT-4 on real use cases with internal experts in the loop before fully deploying it. They ran domain-specific evaluation tasks such as summarizing financial documents, then had human advisors grade the AI’s answers for accuracy and coherence. This blend of offline metric checks and human-in-the-loop trials allowed them to refine prompts and improve the model’s output quality in a targeted way.

2. Standalone Model vs. LLM-Powered Application

Another strategic consideration is whether you are evaluating a standalone model vs. an LLM-powered application. Testing a raw model (say via an API) is different from testing it inside an app or product. Standalone model testing might involve tasks like: given a prompt, does the model generate the expected completion? You can automate much of this with predefined queries and known answers. But when that model is part of an application (a chatbot, a document search tool, etc.), you need to evaluate the end-to-end system: Can the app’s interface properly capture user intent and feed it to the LLM? Does the LLM’s answer integrate correctly into the app’s responses or database? And is the overall user experience smooth and effective (reasonable response time, understandable output, etc.)? System evaluation often brings in additional metrics, for example, user experience metrics (like user satisfaction ratings, or the time it takes for a user to get a useful answer) and robustness tests (throwing in noisy or adversarial inputs to see if the system still behaves reliably. By incorporating both model-centric and system-level evaluations, companies ensure they are not just choosing a technically proficient model, but also building an AI solution that is practical and user-friendly in production.

3. Automated evaluation techniques vs. human assessment

This is another crucial aspect of LLM evaluation strategy. Automated evaluation involves using algorithms and metrics to judge the model’s outputs. For example, one might use an automatic metric to compare the model’s answer to a reference answer, or run a script to check if the output contains certain forbidden words. Automated tests are fast, scalable, and consistent, and they’re very useful for things like regression testing (checking if a new model version has gotten better or worse on specific tasks). However, automated metrics often can’t tell the full story. They might miss nuances like whether an answer is well-explained or appropriate in tone. As a result, human evaluation remains indispensable for nuanced judgment. Human evaluators can assess things like answer helpfulness, coherence of a narrative, or adherence to subtle conversational norms. For instance, humans can tell if a model’s explanation makes sense and is convincing, whereas an automated metric might only check if certain keywords are present.

In practice, the best strategy is often a combination of automated and human evaluations. Automated checks can handle the heavy lifting at scale (e.g., running thousands of prompts through the model to flag obvious errors or measure response length, etc.), and they can pre-screen outputs to identify promising vs. problematic ones. After that, human judges can focus on evaluating the quality in depth for a smaller set of outputs. Many organizations create evaluation sets and have human reviewers rate each model’s responses on dimensions like correctness, clarity, and appropriateness. These human ratings become the “gold standard” to compare models or fine-tune prompts. Notably, some newer approaches use LLMs themselves as evaluators (“LLM-as-a-judge”). In this approach, an AI model is used to critique or score the outputs of another model. Interestingly, studies have found that these AI judges can agree with human evaluators over 80% of the time, suggesting a scalable proxy for human judgment. While not perfect, LLM-based evaluators are being used to augment evaluation.

In summary, evaluating an LLM is a multi-faceted process: start in the lab with controlled tests on the model itself, then move to real-world scenario tests to see how it behaves in context. Use automated metrics to get objective, repeatable measurements, and layer in human assessments to capture quality and nuance. And importantly, evaluate not just the model’s raw output, but the performance of the entire AI-powered solution in delivering value to end-users.

{{cta="/cta/automate-quality-management-with-ai-agents"}}

How to Evaluate LLM Performance: Key Metrics and Benchmarks

To systematically evaluate LLM performance, organizations rely on a set of key metrics that capture different dimensions of output quality. Some of the most important metrics and evaluation criteria include:

1. Accuracy and Factual Correctness

At the core, we want to know if the LLM’s output is correct. Accuracy can mean the answer is factually right or that it fulfills the user’s request correctly. For instance, if the task is a math word problem or a yes/no question, did the model get it right? In generative tasks, factual accuracy often relates to avoiding “hallucinations”: making sure the model’s statements are grounded in truth or provided source data. Gartner refers to this as accuracy and groundedness, i.e. the response should be fact-based and precise. Some ways accuracy is measured include exact match scores for Q&A, or verification against known facts. For example, an LLM retrieving information from a company's knowledge base might be evaluated on what percentage of its answers contain only correct, verifiable facts.

2. Coherence and Fluency

Coherence measures how logically consistent and well-structured the output is, while fluency measures grammatical and natural language quality. An LLM could be factually right yet produce an incoherent explanation. Evaluators look at whether the response flows logically, stays on topic, and is easy to understand. These qualities are usually judged by humans, but there are proxies like measuring perplexity (lower perplexity often correlates with more fluent text) or using metrics that compare to human-written text.

3. Relevance

Relevance evaluates how well the model’s output addresses the prompt or user’s query. An answer might be correct in some sense, but if it’s not on-point for the question or context, it’s not useful. LLM evaluations often check that the response stays on task. This can be measured by human raters scoring “How relevant is this answer to the question?” or by automated means like checking that key terms from the query appear in the answer. Gartner highlights relevance and recall as key criteria: the output should align with business needs and cover the necessary information.

4. Compliance and Safety

These are critical qualitative metrics, especially for enterprise and public-facing applications. Compliance means the output adheres to any applicable laws, regulations, and company policies. Safety involves checking that the model’s output is not harmful or offensive. This includes absence of hate speech, harassment, or biased language. It also includes avoiding unsafe instructions (e.g. not giving advice that could cause harm). Many organizations now treat these as formal metrics, running tests for banned content or bias. For example, an LLM might be evaluated on a toxicity score (how much offensive content is generated) or undergo bias testing by inputting prompts that reference different demographic groups and seeing if outputs are consistently fair. In Gartner’s framework, safety and bias detection are explicit evaluation dimensions, where models are checked for problematic outputs and those risks are mitigated.

{{cta="/cta/validate-certificates-instantly-with-ai-agents"}}

5. Efficiency and Performance

Beyond the content of outputs, organizations also evaluate how efficiently the model operates. This includes speed (latency): how quickly does the model produce a response?; and scalability: can it handle many requests or large documents without timing out or costing a fortune? Efficiency can also refer to computational cost: is the model using reasonable resources per query? These factors directly impact user experience and business viability. For instance, if an AI assistant takes 30 seconds to answer, users will be frustrated. Or if each query costs too much in cloud computing, it won’t be cost-effective at scale. Thus, metrics like average response time, throughput (requests handled per second), and even cost-per-query are monitored. Gartner notes that evaluating LLMs for “scalability, efficiency and long-term value” is crucial for alignment with business goals. In practice, teams might set targets like: 95% of responses should return in under 2 seconds, or the system should support 100 concurrent users without degradation.

6. User Satisfaction and UX Metrics

This is a qualitative outcome metric: are users happy with the answers and experience? It can be measured via surveys (“rate this answer 1–5”), feedback buttons (thumbs up/down), or analyzing usage patterns (do people abandon the chatbot or keep engaging?). A model might have decent objective performance but still not meet user needs. Maybe its tone is off-putting or it doesn’t format the answer as the user expects. So, collecting human feedback in real deployment is key. Some companies use NPS (Net Promoter Score) or CSAT (customer satisfaction) specifically for their AI features. Others look at retention. High user satisfaction often correlates with metrics like coherence and relevance, but it’s an important independent check.

7. Contextual Understanding

This refers to the model’s ability to understand nuance, maintain context in a conversation, and demonstrate “common sense.” It’s harder to quantify but critical in complex tasks. For example, in a multi-turn dialogue, does the LLM remember what was said earlier and respond accordingly? Does it understand implied meaning or subtle requests? Evaluators might test this by scenario-based prompts or role-play conversations and see if the model stays consistent. Another aspect is handling domain context – if the model is deployed in a specific domain (say legal or medical), can it use the jargon correctly and stick to domain facts? Organizations sometimes create custom evaluation sets with realistic scenarios to assess contextual understanding. For instance, they may simulate a full customer service call in text and see if the AI can follow along naturally. This is often evaluated qualitatively by experts: they will score transcripts for how well the AI maintained context and delivered appropriate solutions.

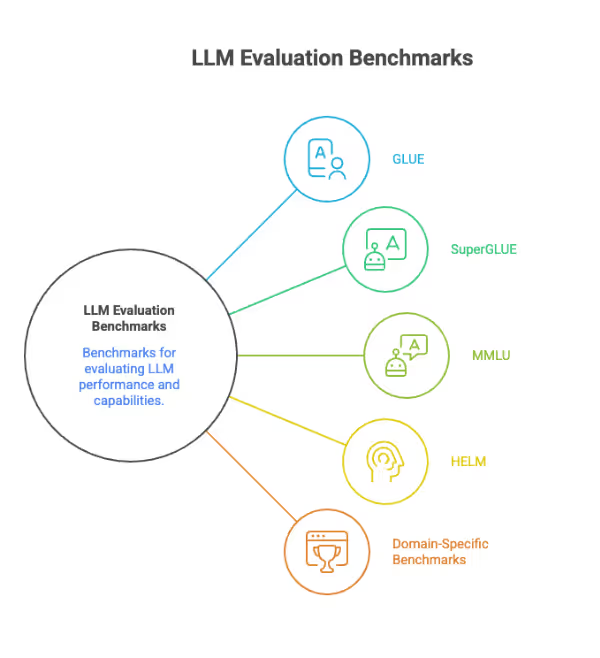

To measure these various aspects, the AI community has developed recognized benchmarks that serve as performance yardsticks. Widely used benchmarks include:

GLUE (General Language Understanding Evaluation)

A suite of tests for language understanding, covering tasks like sentiment analysis, question answering, textual entailment, etc. GLUE provides a broad measure of how well a model grasps meaning and context across different tasks. Scoring highly on GLUE means the model can handle a variety of NLP tasks at a level comparable to human performance on those tasks. Enterprises and researchers use GLUE to compare models in a standardized way: it’s like a common scoreboard for language model capability.

SuperGLUE

An updated, more challenging version of GLUE with harder language tasks (understanding cause-effect, nuanced common sense reasoning, etc.). Top models are often benchmarked on SuperGLUE to demonstrate advanced understanding. It’s relevant in evaluation when choosing or fine-tuning an LLM: a model performing well on SuperGLUE is likely more “comprehension-savvy”.

MMLU (Massive Multi-Task Language Understanding)

A benchmark that tests an LLM’s knowledge and reasoning across 57 subjects, from history and literature to math and biology. It’s essentially an academic exam for models. MMLU is useful to evaluate breadth and depth of knowledge: for example, if you’re adopting a model for a wide-ranging knowledge assistant, you’d want to see a strong MMLU performance to ensure it can handle diverse topics. High scores on MMLU indicate the model can recall facts and solve problems in many domains, which is a proxy for generality and reasoning power.

HELM (Holistic Evaluation of Language Models)

This is a comprehensive benchmarking framework from Stanford that evaluates models on a wide array of metrics and scenarios for a more holistic view of performance. By covering 7 primary metrics (accuracy, robustness, calibration, fairness, bias, toxicity, efficiency) and 26 scenarios, HELM gives organizations a multidimensional report card of an LLM. For instance, HELM will test a model’s behavior on tasks involving potential misinformation or biased content. Or a business might use HELM results to identify if a model is strong in raw accuracy but weak in fairness or vice versa, informing where to put additional safeguards or training.

Domain-Specific Benchmarks

In addition to general benchmarks, there are tests tailored to specific industries or tasks. For example, the medical domain has benchmarks like PubMedQA for biomedical question answering, or MMLU’s subset of medical science questions. The legal domain might use a benchmark like a bar exam question set or CaseLAW summaries to see if an LLM understands legal text. If you’re deploying an LLM in such a specialized field, you’d evaluate it on these industry benchmarks to ensure it meets the domain’s accuracy and reliability bar.

Using established benchmarks provides a reference point: you can compare your chosen or fine-tuned model against known scores (e.g., “Our fine-tuned model achieves a ROUGE score on summarization close to the state-of-the-art” or “Model X outperforms Model Y on MMLU by 5 points, so it has better general knowledge”). However, it’s equally important to supplement benchmarks with custom tests aligned to your specific use cases. Benchmarks can tell you a model’s general capability, but your own metrics (like “success rate in answering our customers’ top 100 questions”) will tell you if it’s truly performing for your business. In practice, organizations use a combination: they watch benchmark results for a high-level picture and use them to shortlist models, then do intensive internal evaluations on their particular tasks to make final decisions.

Best Practices for Effective LLM Evaluation

Drawing from these insights and industry guidance, several best practices have emerged to ensure LLM evaluation is done effectively:

1. Define clear objectives and success criteria from the start

Before you even evaluate, be explicit about what “good performance” means for your application. Tie the LLM’s evaluation metrics to business objectives. For example, if the goal is to reduce customer support resolution time, your evaluation might prioritize the model’s correctness on support FAQs and its ability to decide when to hand off to a human. Having clear, use-case-driven metrics focuses the evaluation on what truly matters.

2. Use a mix of benchmark tests and custom tests

Leverage well-known benchmarks to get a sense of a model’s capabilities, but don’t stop there. Customize evaluation to your domain and data. If you’re a legal firm, include legal case summaries in the eval; if you’re building a coding assistant, include a suite of coding tasks and bugs to fix. Best practice is to create a representative test set that reflects real tasks and edge cases your model will face.

3. Involve the right people (human-in-the-loop)

Evaluation shouldn’t be left solely to data scientists. Multi-disciplinary involvement is a best practice. Domain experts can judge correctness and relevance in context (e.g. a medical doctor reviewing a medical answer), compliance officers can spot any outputs that raise regulatory concerns, and end-users or UX experts can assess whether the tone and clarity meet user expectations. Having humans “in the loop” is especially crucial for subjective aspects like usefulness, empathy, or adherence to company values. Also ensure roles and responsibilities in the evaluation process are clear – e.g., who signs off that the model is ready to go live? Deloitte recommends defining stakeholder roles across the AI lifecycle, so everyone knows their part in validating and approving the model’s performance.

4. Establish a rigorous testing protocol

Don’t evaluate only on ideal inputs. Intentionally test the model’s limits and failure modes. This is often called red teaming. Best practices include testing for adversarial or tricky inputs: ambiguous questions, inputs with false assumptions, attempts to get the model to produce disallowed content. By probing the model in this way, you can identify where it might go off-track under malicious or unusual use.

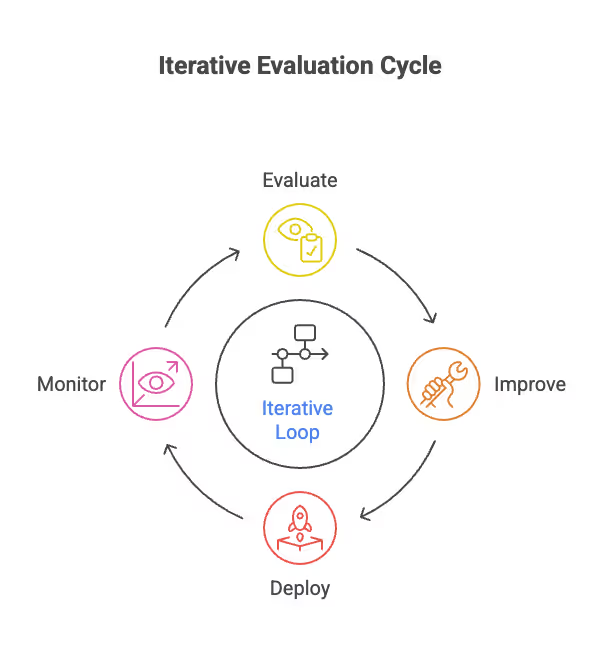

5. Continuously monitor and evaluate in production (iterative evaluation)

LLM evaluation is not a one-and-done task. Once the model is deployed, real-world conditions will continue to evolve: user behavior might change, new use cases emerge, or the model might drift in performance over time. Set up ongoing monitoring to track key metrics in production. For example, measure the rate of disputed answers or user dissatisfaction signals each week. Many organizations implement automated monitoring: logging model outputs and scanning them for anomalies or policy violations, and scheduling periodic re-evaluation against a test set. Adopting an iterative loop of evaluate → improve → deploy → monitor → evaluate... ensures the model maintains or improves its performance over time rather than “drifting” or becoming stale. It also allows incorporation of new success criteria as business needs change.

6. Benchmark and recalibrate periodically

It’s wise to benchmark your model’s performance over time and against alternatives. This could mean running the same standardized test every quarter to see if there’s regression, or comparing your model to a new model on the market. Benchmarking keeps you informed of where you stand. Continuous benchmarking in this way acts as an early warning system for model drift or emerging issues. It also helps quantify the impact of any model updates or fine-tuning you do: always evaluate an updated model on the same metrics/benchmarks to ensure the change was an improvement (and didn’t introduce new problems).

7. Document and govern the evaluation process

Treat evaluation as a formal component of AI governance. Keep records of evaluation results, decisions made, and any accepted trade-offs. This documentation is valuable for compliance (proving due diligence in model validation) and for internal learning. It’s a best practice to have an evaluation report for any major model deployment that summarizes: what was tested, by whom, results, and recommendations. As part of AI governance, leadership should be involved in reviewing evaluation outcomes, much like a quality assurance sign-off.

8. Update evaluation criteria as the AI and use cases evolve

Over time, you may discover new metrics are needed. Perhaps user feedback reveals that response consistency (not giving different answers to the same question) is a concern: you might then add a “consistency check” to your evaluation. Or your application expands to a new market, so you need to evaluate performance in another language. Best practices entail regularly revisiting your evaluation strategy to make sure it covers all relevant angles. The field of LLMs also changes, with new benchmarks and techniques emerging (for example, new methods to evaluate reasoning or truthfulness). Staying up-to-date and incorporating those can strengthen your evaluation framework. In essence, be ready to evolve your evaluation in response to both your business context and the external advances in AI evaluation methods.

By following these best practices organizations can significantly increase their chances of deploying LLMs successfully. A robust evaluation regimen not only catches issues early but also builds a stronger understanding among the team of how the AI behaves, which is invaluable for maintenance and improvement.

Conclusion: Making LLM Evaluation Work for Your Business

As we’ve seen, evaluating large language models is a multi-dimensional effort, but it’s one that pays dividends in accuracy, reliability, and ultimately user and business success.

In conclusion, rigorous LLM evaluation is the backbone of trustworthy and effective AI adoption. It turns the art of working with advanced AI into a science of measuring and improving outcomes. Organizations that invest in strong evaluation practices will find that their AI initiatives are far more likely to meet their targets, delight users, and avoid unintended consequences. In a time where AI capabilities are accelerating, it is the discipline of evaluation that enables responsible scaling, allowing businesses to confidently embrace generative AI innovations, knowing that performance and risks are under control. By establishing a robust evaluation process, one that is continuous, comprehensive, and aligned with business objectives, companies can unlock the full potential of LLMs while safeguarding quality and trust, ultimately gaining a competitive edge in the AI-powered future.

FAQ

LLM evaluations are processes used to test and measure how well large language models perform. They help determine whether a model gives accurate, helpful, and appropriate responses. This can include lab tests using standard benchmarks, automated checks for speed or quality, and human reviews for clarity, relevance, or tone. Together, these methods show how reliable and useful the model is in real-world scenarios.

Evaluating LLMs is challenging because their outputs are complex and open-ended - they aren’t always black or white. A response might be factually wrong but sound convincing, or it might be technically correct but irrelevant or confusing. Automated tools can catch basic errors or measure consistency, but they often miss deeper issues like bias or nuance. Human judgment is still needed, especially for more complex tasks, but it takes time and depends on context and expertise.

LLM evaluation is key to building AI you can trust. It helps teams understand how well a model performs, where it falls short, and how to improve it. Good evaluation prevents harmful outputs, saves time, and builds confidence, especially when using LLMs in business-critical areas like customer service, legal support, or compliance. Without strong evaluation, you risk poor performance, frustrated users, or serious mistakes.