Ethics for AI: Governing Workflows for Secure Automation

Artificial intelligence (AI) is revolutionizing how organizations operate, promising efficiency and innovation on a global scale. In fact, over 70% of executives believe AI will be the most significant business advantage for the future. However, alongside this promise comes a profound responsibility: ethics for AI.

AI ethics (also called ethical AI or responsible AI) refers to the principles and practices that ensure AI systems operate in a morally sound, fair, and trustworthy manner. As AI becomes integral to decision-making and automation workflows, decision-makers must understand and govern these ethical considerations to achieve secure and sustainable automation.

Ethical lapses in AI can carry heavy consequences for businesses and society. Unchecked AI systems have produced biased results, invaded privacy, and even made opaque decisions with far-reaching impact. Such outcomes erode trust and invite reputational damage.

What is AI Ethics?

AI ethics refers to the broad field and set of guidelines for ensuring AI technologies are developed and used in a morally responsible way. IBM defines AI ethics as “a multidisciplinary field that studies how to optimize the beneficial impact of artificial intelligence (AI) while reducing risks and adverse outcomes”. It’s more than compliance; it’s about aligning AI with human values and societal norms, and applying our sense of right and wrong to the new frontier of AI technologies. In practice, this means finding ways to harness AI for good (improving efficiency, decision quality, customer experience, etc.) without causing harm through misuse, bias, or unintended consequences.

It’s important to clarify terminology: “ethical AI” typically refers to AI systems designed and used in an ethical manner, whereas “AI ethics” or “artificial intelligence ethics” denotes the overall domain of study and policy setting around AI’s moral implications. Simply put, ethical AI is the goal, and AI ethics provides the framework and tools to achieve that goal.

Understanding AI Ethics

Discussions around artificial intelligence and ethics encompass numerous issues. Common topics include avoiding bias and discrimination, ensuring transparency and explainability of AI decisions, safeguarding privacy and data rights, maintaining human accountability for AI actions, and preventing misuse of AI (such as malicious applications or violation of human rights). For example, AI ethics considers questions like: How do we prevent an AI-driven hiring system from unfairly filtering out candidates based on gender or race? How do we make sure an AI that helps diagnose illnesses is transparent about its reasoning? Who is responsible if an autonomous vehicle causes an accident?

Artificial intelligence ethics also implies governance: the organizational policies, values, and controls that guide AI behavior. Ethical AI requires companies to extend their ethics and compliance programs to cover AI systems throughout their lifecycle: from design and data selection to model training, deployment, and monitoring. This ensures that AI solutions adhere to the same standards of integrity and responsibility expected in human decision-making.

Failing to govern AI ethically can hurt an organization’s brand, invite lawsuits or fines, and undermine stakeholder confidence. On the positive side, organizations that prioritize artificial intelligence ethics are finding it pays off: research indicates that establishing digital trust (through robust privacy, security, and responsible AI) correlates with stronger growth. Society at large also benefits when AI is developed and used ethically, as it preserves fundamental rights, prevents discriminatory outcomes, and fosters public trust in AI-driven innovations.

Over the past few years, AI ethics has rapidly gained prominence. Once the realm of researchers and philosophers, it is now a top concern for industry and governments. According to a recent analysis, “AI ethics has progressed from centering around academic research and non-profit organizations [to] big tech companies... assembling teams to tackle ethical issues”. Companies like IBM, Google, and Meta have established AI ethics boards or offices to guide their product development. In parallel, global forums and national agencies are actively working on AI ethics guidelines. For instance, UNESCO has coordinated international efforts on AI ethics, and many countries have formed advisory councils on the topic. All this reflects a growing consensus: AI cannot be left to developers alone; stakeholders from ethicists to policymakers and business leaders must collaborate to ensure AI is ethical and trustworthy by design.

Source: Coursera

Why AI Ethics Matters in Business and Society

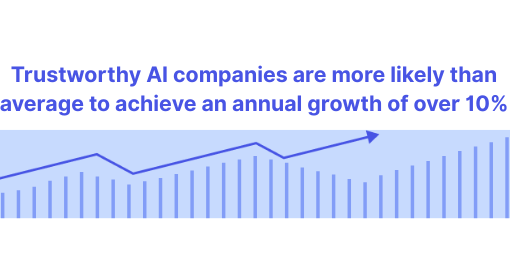

AI ethics is not just a matter of altruism or public relations – it has tangible importance for both business outcomes and broader societal well-being. In the business arena, ethical AI is quickly becoming a strategic imperative. A McKinsey global survey found that building digital trust (including trustworthy AI and transparency around data use) correlates with growth: organizations strong in digital trust capabilities are far more likely to achieve annual growth over 10%. Consumers increasingly vote with their wallets based on a company’s ethical stance.

Source: created by turian, based on data from McKinsey.

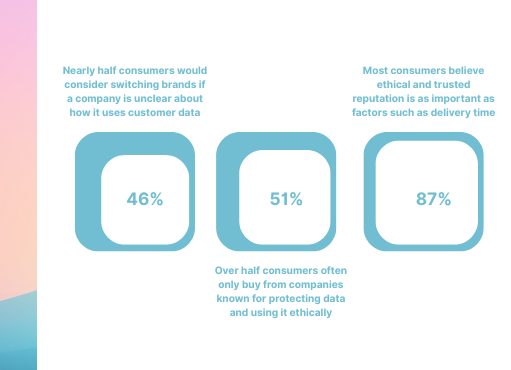

Transparency is especially critical:

- Most consumers say it’s important for companies to be clear about their AI and data policies, and nearly half will consider switching brands if a company is unclear about how it uses customer data.

- Over half of consumers say they often buy only from companies known for protecting data and using it ethically.

- Many consumers believe that an ethical and trusted reputation is as important as common purchase decision factors, such as delivery time.

Source: created by turian, based on data from McKinsey.

These trends show that companies have a business incentive to get AI ethics right: it builds brand loyalty, mitigates risk, and can even be a market differentiator.

On the flip side, neglecting AI ethics can lead to loss of trust, regulatory sanctions, and public backlash. A lack of ethical oversight can quickly become a legal liability. For instance, data privacy violations or discriminatory outcomes can result in lawsuits and fines. IBM’s experts caution that ignoring AI ethics invites “reputational, regulatory and legal exposure” for companies.

From a societal perspective, artificial intelligence ethics is critical to ensure AI benefits everyone and does not deepen inequalities. AI systems, if left unchecked, could perpetuate historical biases or make decisions that inadvertently harm vulnerable groups. UNESCO emphasizes that AI’s rapid rise “raises profound ethical concerns” because AI systems “have potential to embed biases… resulting in further harm to already marginalized groups”. For example, consider a company that screens job applicants with an AI system trained on historical hiring data, which mostly reflects past hiring patterns and biases. Such a system might systematically downgrade or exclude candidates from certain demographic backgrounds, even if unintentionally. It might, for instance, disproportionately reject qualified women or ethnic minorities because these groups were historically underrepresented in the company’s workforce. This kind of scenario illustrates why society needs ethical guardrails for AI.

As UNESCO’s Assistant Director-General Gabriela Ramos put it, “without the ethical guardrails, [AI] risks reproducing real world biases and discrimination, fueling divisions and threatening fundamental human rights and freedoms”.

Finally, regulators and governments around the world are responding to public concern by crafting rules to enforce ethical AI practices. The direction is clear: organizations will soon be legally required to implement many AI ethics principles. Early adopters of ethical AI are effectively future-proofing their operations against coming regulations. They also stand to shape the standards and earn consumer trust in the meantime.

In summary, AI ethics matters because it builds trust and protects against risks that could derail AI initiatives. Ethical AI fosters acceptance of AI among users and society, enabling organizations to fully realize AI’s benefits without provoking the “dark side” of technology.

As evidence of the growing importance of this issue, 75% of executives worldwide ranked AI ethics as “important” in 2021, a big jump from under 50% in 2018. Ethical AI has moved from a niche consideration to a mainstream mandate in just a few years.

Core Ethical Principles for AI Implementation

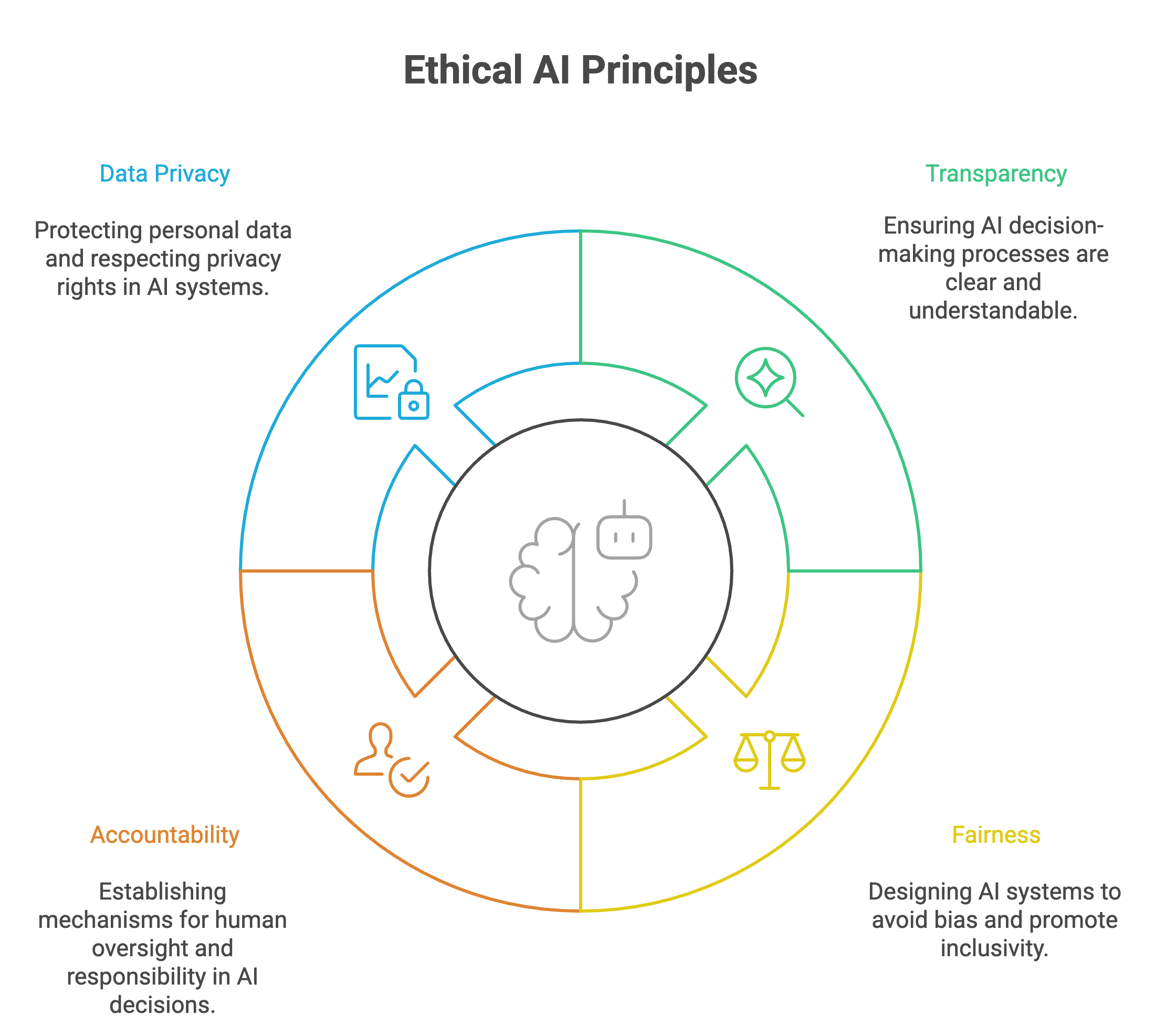

Translating ethical AI from theory into practice starts with a set of core principles. These guiding principles serve as a compass for organizations to design, deploy, and govern AI systems responsibly. There is no agreement on a common set of ethical AI principles, but the most widely cited include:

Source: turian

Transparency

The processes by which an AI makes decisions or predictions should be as clear as possible, either inherently or through tools that explain the AI’s reasoning. Transparency involves explainability, so that stakeholders can trace how input data leads to outputs, and disclosure, so that people know when they are interacting with an AI (versus a human or traditional software). Regulators also emphasize transparency; the EU’s AI Act explicitly includes transparency requirements for certain AI systems, and UNESCO’s global AI recommendation cites “transparency and explainability” as fundamental preconditions for protecting human rights.

Fairness

Fairness means AI outcomes should be just, impartial, and inclusive. AI systems must be designed to avoid unfair bias against any group based on attributes like race, gender, age, or other protected characteristics. It also involves accessibility: ensuring AI benefits are accessible to diverse communities.

Accountability

Accountability means that there are mechanisms for humans to be responsible for AI behavior and outcomes. Even when AI automates a decision, a human organization should be answerable for that decision.

Data Privacy and Protection

This refers to safeguarding personal data and ensuring AI systems respect individuals’ privacy rights. AI often relies on vast amounts of data, some of it sensitive personal information. The ethical principle of privacy demands that this data be collected and used with consent, kept secure, and not exploited for purposes harmful to the individual. It also means being transparent with users about data usage and obtaining informed consent where appropriate. Another facet is protecting data from breaches: AI systems should have robust security, since a breach can violate many individuals’ privacy at once. Privacy is strongly backed by law, such as the EU’s General Data Protection Regulation (GDPR), one of the world’s strictest data protection laws.

Implementing Ethics for AI: A Practical Guide

As AI becomes increasingly integrated into business operations, implementing ethical AI practices is essential to ensure that AI systems are developed and used in ways that align with human values and societal norms. Leading organizations have established comprehensive frameworks to guide the ethical development and deployment of AI systems.

Some steps include:

1. Establish Clear Ethical Guidelines

Develop a set of ethical principles that align with your organization’s values and comply with regulatory requirements. Consider the principles we previously discussed to guide the entire AI lifecycle. By embedding these principles into organizational policies and practices, businesses can create a robust ethical framework that guides the responsible development and use of AI technologies.

2. Implement Robust Governance Structures

Create governance frameworks that oversee AI initiatives. Establish clear roles and responsibilities for AI governance, ensuring that ethical considerations are integrated throughout the AI development lifecycle. Implement regular audits, impact assessments, and oversight committees to review AI systems for potential biases and ensure compliance with ethical standards. Stay up to date with new compliance regulations and legislation, and update your frameworks accordingly.

3. Ensure Transparency and Explainability

Adopt tools and practices that make AI decisions understandable to stakeholders. Implement transparency measures by creating clear documentation of AI system design, data sources, and decision-making processes. Utilize explainability techniques to ensure that AI outputs can be interpreted and understood by users, fostering trust and accountability.
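As a rough illustration of what "clear documentation" could look like in practice, the sketch below keeps a small structured record per AI system and reports which fields are still empty. The `ModelRecord` class, its field names, and the helper functions are hypothetical and chosen for this example only; they are not a standard schema or any vendor's format.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class ModelRecord:
    """Hypothetical documentation record for one AI system."""
    name: str
    purpose: str
    data_sources: list[str]
    known_limitations: list[str] = field(default_factory=list)
    human_contact: str = "unassigned"


def to_json(record: ModelRecord) -> str:
    """Serialize the record so it can be stored alongside the model."""
    return json.dumps(asdict(record), indent=2, sort_keys=True)


def missing_fields(record: ModelRecord) -> list[str]:
    """Return the names of fields that are still empty or unassigned."""
    gaps = []
    for key, value in asdict(record).items():
        if value in ("", [], "unassigned"):
            gaps.append(key)
    return gaps
```

A record like this can be reviewed at each release, so that missing documentation is caught before a system goes live rather than during an audit.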

4. Prioritize Fairness and Mitigate Bias

Regularly audit AI systems to identify and address biases. Employ strategies such as pre-processing, in-processing, and post-processing techniques to mitigate bias in AI models. Ensure that training datasets are diverse and representative, and that algorithmic decisions do not disproportionately impact any particular group.
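One simple way to start a bias audit is to compare how often each group receives a favorable outcome. The sketch below computes per-group selection rates and the ratio between the lowest and highest rate. The function names are hypothetical, and the 0.8 default threshold is an assumption borrowed from the "four-fifths" rule of thumb used in US employment contexts, not a value this article prescribes. A low ratio is a signal to investigate, not proof of discrimination.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Compute the share of favorable outcomes per group.

    `decisions` is an iterable of (group, selected) pairs, where
    `selected` is True when the AI produced a favorable outcome.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in decisions:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest to the highest group selection rate."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so no disparity to measure
    return min(rates.values()) / highest


def flag_for_review(decisions, threshold=0.8):
    """Return True when the ratio falls below the chosen threshold (an assumption)."""
    return disparate_impact_ratio(selection_rates(decisions)) < threshold
```

Running a check like this on every retrained model, and storing the result, also produces the kind of fairness evidence that audits tend to ask for.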

5. Maintain Human Oversight

Ensure that AI systems are designed to include human-in-the-loop mechanisms. Establish clear guidelines and responsibilities for human oversight within your organization. Implement human review processes for critical stages of AI output generation, especially in areas with high ethical sensitivity or where incorrect information could have significant consequences.
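A human-in-the-loop mechanism can be as simple as a routing rule that decides which AI outputs may be applied automatically. The following is a minimal sketch under stated assumptions: the `Prediction` fields, the `route` function, and the 0.9 confidence threshold are all hypothetical and would need to be set by each organization's own policy.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    label: str
    confidence: float  # model's own score between 0 and 1
    high_impact: bool  # e.g. affects a person's job, credit, or health


def route(prediction: Prediction, min_confidence: float = 0.9) -> str:
    """Decide whether a prediction may be applied automatically.

    Returns "human_review" for high-impact or low-confidence cases,
    and "auto_apply" otherwise.
    """
    if prediction.high_impact or prediction.confidence < min_confidence:
        return "human_review"
    return "auto_apply"
```

The design choice here is that high-impact cases always go to a person, regardless of how confident the model is, because a confident model can still be wrong in ways that matter.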

6. Foster Continuous Education and Awareness

Provide comprehensive training for your team. Equip employees with the knowledge and skills necessary to understand and address the ethical implications of AI technologies. Use a training curriculum that covers general fundamentals as well as role-specific training tailored to the needs of different departments. Promote continuous learning, and keep the training up to date.

Regulatory Landscape of AI Ethics

Around the world, regulators and policy-makers are racing to establish guidelines and laws to govern AI ethics. The regulatory landscape is evolving rapidly, with new proposals and frameworks emerging at international, national, and industry levels. Senior decision-makers need to be aware of these developments, as they will shape compliance obligations and best practices for AI use. Here we outline some of the key regulatory and governance initiatives addressing artificial intelligence ethics.

European Union AI Act

Perhaps the most significant regulatory effort to date is the European Union’s AI Act, the first comprehensive legal framework for AI. The AI Act (formally Regulation (EU) 2024/1689) is a sweeping law that takes a risk-based approach to AI oversight. It categorizes AI systems into risk levels: Unacceptable Risk AI, High-Risk AI, Limited Risk AI and Minimal Risk AI.
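The four risk levels lend themselves to a simple internal inventory of AI systems. The sketch below is illustrative only: the `RiskTier` enum mirrors the four levels named above, but the `NEXT_STEP` summaries and the `triage` function are hypothetical internal conventions, not the Act's wording or legal advice. Assigning a system to a tier is a legal judgment that the code does not attempt.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Simplified, illustrative next steps; these are assumptions for the example.
NEXT_STEP = {
    RiskTier.UNACCEPTABLE: "do not deploy; escalate to legal",
    RiskTier.HIGH: "run compliance and human-oversight checks before release",
    RiskTier.LIMITED: "add user-facing disclosure",
    RiskTier.MINIMAL: "follow standard internal review",
}


def triage(inventory: dict[str, RiskTier]) -> dict[str, str]:
    """Map each system in a hypothetical AI inventory to an internal next step."""
    return {system: NEXT_STEP[tier] for system, tier in inventory.items()}
```

Keeping the tier assignment as explicit data makes it easy for compliance staff to review and update classifications as guidance evolves.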

The goal of the EU AI Act is to ensure “trustworthy AI” that “guarantee[s] safety, fundamental rights and human-centric AI” while also encouraging innovation.

Responsibilities are shared across several actors: authorities carry out market surveillance of AI systems, deployers must ensure human oversight and monitoring, and providers must operate a post-market monitoring system. The European AI Office and authorities of the EU member states are responsible for the Act’s implementation, supervision, and enforcement.

Source: European Commission

General Data Protection Regulation (GDPR) and Privacy Laws

Even before specific AI laws, data protection regulations have indirectly enforced certain ethical standards on AI. The EU’s GDPR, implemented in 2018, is the most prominent.

It regulates any AI that processes personal data of EU residents. Article 22 grants individuals the right not to be subject to decisions based solely on automated processing that produce “legal or similarly significant” effects, and to demand human review or meaningful explanation. This provision discourages opaque, fully automated scoring in credit, hiring, insurance and similar contexts.

UNESCO Recommendation on the Ethics of AI

On the global stage, UNESCO (United Nations Educational, Scientific and Cultural Organization) achieved a milestone in November 2021 when all 193 member states adopted the Recommendation on the Ethics of Artificial Intelligence. This is the “first global standard-setting instrument on the subject” of AI ethics.

While not legally binding like a national law, it represents international consensus on AI ethics principles. The UNESCO Recommendation articulates ten overarching principles, which include many of the ones we discussed: transparency and explainability, fairness and non-discrimination, accountability, privacy, human oversight, and others.

Many firms are already updating their policies to follow the UNESCO Recommendation. For instance, SAP updated its AI Ethics Policy to align with the 10 UNESCO principles.

Other Notable Frameworks and Initiatives:

OECD AI Principles

The OECD (Organisation for Economic Co-operation and Development) adopted 5 AI principles in 2019, which have been endorsed by dozens of countries. These principles emphasize inclusive growth, human-centered values, transparency, robustness (safety) and accountability, along with a call for international cooperation on AI governance. The OECD principles influenced the EU and other approaches and led to the creation of the Global Partnership on AI (GPAI), an international body to collaborate on AI ethics and policy.

Ethics Guidelines for Trustworthy AI (EU)

Before the AI Act, an EU High-Level Expert Group released guidelines (2019) for Trustworthy AI, outlining 7 requirements: human agency and oversight, technical robustness and safety, privacy and data governance, transparency, diversity/non-discrimination/fairness, societal and environmental well-being, and accountability. These have been widely cited in industry and shaped the thinking behind the AI Act’s risk framework.

Industry Self-Regulation and Standards

Industry groups and standards bodies are also contributing. For example, the Institute of Electrical and Electronics Engineers (IEEE) has an ongoing initiative on Ethically Aligned Design and standards like IEEE 7001-2021 for transparency. The International Organization for Standardization (ISO) is developing AI-specific standards (ISO/IEC JTC 1/SC 42), including on AI risk management. Companies like Google, Microsoft, IBM, and others have published their AI ethics principles and even open-sourced toolkits for responsible AI (e.g., Google’s What-If tool for bias testing, IBM’s AI Fairness 360). While not regulations, these create a toolkit and some level of self-regulation expectation within the tech industry.

Other National AI Strategies and Laws

Beyond the EU, other governments are taking steps. The UK has proposed a lighter approach, issuing an AI governance framework that delegates oversight to existing regulators and emphasizes principles without a single AI Act.

The US, at a federal level, has not enacted comprehensive AI legislation yet, but the White House has released a Blueprint for an AI Bill of Rights (outlining principles like safe and effective systems, algorithmic discrimination protections, data privacy, notice and explanation, and human alternatives/backup), which is more of a policy guide than enforceable law as of now.

China has issued ethical guidelines for AI and implemented rules for specific AI applications (such as recommender systems and deepfakes) that mandate transparency and prohibit certain behaviors (with a focus on social stability). Many other countries (Canada, Australia, Singapore, Brazil, etc.) have AI ethics or responsible AI frameworks in the works or released.

Sectoral Regulations

Certain industries have specific guidelines:

- Healthcare: Organizations like the World Health Organization (WHO) issued guidance on Ethics & Governance of AI in Health (2021), stressing patient safety, responsibility, and inclusion.

- Finance: The financial industry is heavily regulated for fairness and transparency, and regulators are paying attention to AI in credit scoring, trading, etc. In the EU, the AI Act will classify many finance AI systems as high-risk.

- Defense: Even the military domain has “AI ethics” discussions. NATO and the U.S. Department of Defense have adopted AI ethics principles for the use of AI in warfare (emphasizing responsibility, traceability, reliability, governability), to ensure human control over lethal decisions, for instance.

Given this landscape, it’s clear that global regulatory momentum is toward more oversight of AI to ensure it aligns with ethical norms. For organizations, this means that incorporating AI ethics into workflows isn’t just a nice-to-have but soon a must-have from a compliance perspective.

Key takeaways for leaders:

- Ensure your AI initiatives have legal counsel or compliance officers involved early, to navigate requirements. For multinational companies, aim to meet the highest-standard regulations, as these tend to become global baselines.

- Keep an eye on regulatory updates in all jurisdictions where you operate or have users. Laws can vary; for example, AI used in hiring in Illinois (USA) requires notice and consent, New York City has a law on bias audits for AI hiring tools, etc.

- Participate in industry groups or public consultations where possible. Many regulators seek industry input on AI rules: active engagement can give insight and help shape balanced policies.

- Treat ethical principles as compliance requirements. Document fairness and risk testing, maintain transparency records (e.g., how your AI was trained, what data), and establish oversight committees. These efforts not only fulfill ethical duty but also generate evidence you may need to demonstrate compliance.

How turian Helps You Ensure Ethical AI Workflows

turian recognizes that ethical AI is fundamental to delivering value and maintaining trust with its clients. turian’s platform is designed with ethical principles at its core, ensuring that organizations can automate workflows securely, transparently, and fairly. Here’s how turian addresses AI ethics across its solutions and helps you govern AI-powered workflows for secure automation:

- Our platform incorporates human-in-the-loop mechanisms to ensure human oversight in critical workflow automation. This synergy of AI speed with human discernment means your workflows remain reliable and accountable.

- We make AI operations transparent and auditable, so nothing is hidden from users.

- turian follows strict data standards and executes tests to proactively address bias and unfairness in AI outputs.

- Many of turian’s use cases involve processing business data, which can include personal data about employees, customers, or suppliers (emails, purchase orders, compliance documents, etc.). We have engineered a platform with robust privacy protections: the data that flows into our AI agents is handled under strict access controls and encryption, we comply with regulations like GDPR, and our platform provides features that facilitate consent management and honor user rights, among other security and privacy measures.

- Our platform provides governance tools to clients: they include admin controls and review workflows that let organizations set their own rules for the AI’s operation.

- Our solutions are continually updated to incorporate the latest best practices in AI ethics and comply with new regulations.

Conclusion

As we embrace the power of AI, we must equally embrace the responsibility to guide it with an ethical compass. Ethics for AI is not a passive consideration: it is an active governance challenge that organizations must rise to meet.

The mandate is clear: champion ethical AI as a business imperative. Ask the tough questions about AI projects (“Could this decision process be unfair? How do we explain it? Are we compliant with laws and our values? Who takes responsibility if something goes wrong?”) and ensure satisfactory answers before proceeding. Foster a culture where ethics is everyone’s business, and choose partners and platforms, like turian, that embed ethical principles into their technology.

AI is here to stay, and its role will only grow. By governing AI with wisdom and integrity, we can harness its immense potential for productivity and innovation while upholding the secure, fair, and human-centric practices that our businesses and societies depend on. Ethical AI is not just “doing the right thing”: it’s doing the smart thing for long-term prosperity. As we automate workflows and empower AI agents, let’s do so with eyes wide open to the ethical dimensions, ensuring that automation is secure, trustworthy, and beneficial to all. With the right frameworks and partners in place, AI can truly be a force for good in your organization, driving success and exemplifying the values you stand for.